This is the first post of my series about understanding text datasets. Much of the current progress in NLP is measured in predictive performance. But in practice, you often want and need to know what is going on in your dataset. You may have labels generated from external sources, and you have to understand how they relate to your text samples. You need to understand potential sources of leakage. This series of tutorials addresses these questions.

In this post we will focus on applying topic models (Latent Dirichlet Allocation, LDA) to the “Quora Insincere Questions Classification” dataset on Kaggle. In this competition, Kagglers develop models that identify and flag insincere questions.

Load the data

```python
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt

plt.style.use("ggplot")

df = pd.read_csv("data/train.csv")
print(f"Loaded {df.shape[0]} samples.")
```

Loaded 1306122 samples.

First, let’s look at some of the questions.

```python
print("\n".join(list(df.sample(10).question_text.values)))
```

If Stormy Daniels is called to testify, can Mueller void the non-disclosure agreement she has with Trump's lawyer?

Where may I comment on the new Mozilla branding?

What is the cost of hair straightening in Bangalore?

Would you leave your wife for mayantu langer?

How did scientist determine the mass of Mercury?

What should I do to become a sailor?

How do I gracefully inform a potential PhD advisor that you are choosing another’s advisor/school without burning bridges?

How did the location of Norris Bookbinding Company affect its construction?

Which career path is the hardest to earn a living in: acting, fiction writing, or illustration?

What should I choose? I want a solid career but I am torn between becoming an orthodontist or a real estate owner/manager or investor.

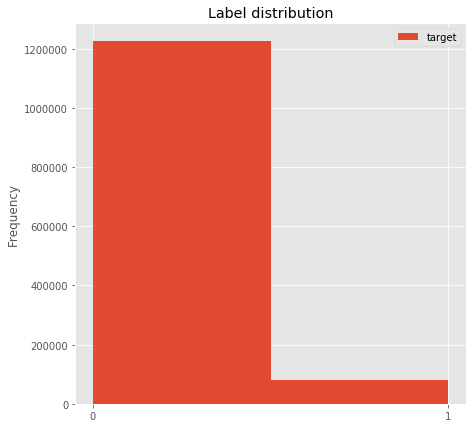

We have two labels: sincere (0) and insincere (1). Let’s take a look at the label distribution.

```python
# Histogram of the binary target column: 0 = sincere, 1 = insincere
ax = df["target"].plot.hist(bins=2, figsize=(7, 7), title="Label distribution", xticks=[0, 1])

print("{:.1%} of the questions are insincere".format(df.target.sum() / df.shape[0]))
```

6.2% of the questions are insincere
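You can also read off the shares of both classes at once with `value_counts(normalize=True)`. Because the target is coded 0/1, the insincere share is simply the column mean. Below is a minimal sketch on a small toy DataFrame standing in for `df`. The toy rows are made up for illustration.

```python
import pandas as pd

# Hypothetical toy stand-in for the Quora training data
toy = pd.DataFrame({
    "question_text": ["q1", "q2", "q3", "q4", "q5", "q6", "q7", "q8"],
    "target": [0, 0, 0, 0, 0, 0, 1, 1],
})

# Relative frequency of each label, sorted by label value
shares = toy["target"].value_counts(normalize=True).sort_index()
print(shares)

# The insincere share equals the mean of the 0/1 target column
print("{:.1%} of the questions are insincere".format(toy["target"].mean()))
```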

To make the topic modeling faster, we work on a random subsample of 100,000 questions.

```python
n_samples = 100000
df_sample = df.sample(n_samples)

print("{:.1%} of the sampled questions are insincere".format(df_sample.target.sum() / df_sample.shape[0]))
```

6.2% of the sampled questions are insincere

So the subsample preserves the label distribution of the full dataset.
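A plain random sample matches the label ratio only approximately. A stratified sample guarantees it by drawing the same fraction from each class. The sketch below uses pandas’ `GroupBy.sample`, which is available since pandas 1.1. It runs on made-up toy data, and the fraction and `random_state` are illustrative choices.

```python
import pandas as pd

# Hypothetical toy data: 90 sincere (0) and 10 insincere (1) questions
toy = pd.DataFrame({
    "question_text": [f"question {i}" for i in range(100)],
    "target": [0] * 90 + [1] * 10,
})

# Draw 20% of the rows from each label group separately
stratified = toy.groupby("target").sample(frac=0.2, random_state=2018)

print(len(stratified))              # 18 sincere + 2 insincere = 20 rows
print(stratified["target"].mean())  # same insincere share as the full data
```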

Compute the topic model

```python
from sklearn.feature_extraction.text import TfidfVectorizer, CountVectorizer
from sklearn.decomposition import LatentDirichletAllocation

n_features = 2000  # number of words (vocabulary size) to use
n_components = 100  # number of topics
```

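First we extract the features for the LDA model: TF-IDF weights of unigrams and bigrams. Strictly speaking, LDA is defined on raw term counts, such as those produced by the CountVectorizer we use later in this post. However, scikit-learn’s implementation also accepts TF-IDF input.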

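```python
# Unigrams and bigrams; ignore terms in more than 95% of the documents or in
# fewer than 2, keep the 2000 most frequent terms and drop English stop words
tf_vectorizer = TfidfVectorizer(max_df=0.95, min_df=2, ngram_range=(1, 2),
                                max_features=n_features,
                                stop_words='english')
tf = tf_vectorizer.fit_transform(df_sample.question_text)
```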
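LDA models documents as integer word counts. By contrast, `TfidfVectorizer` returns real-valued weights, and by default each row is scaled to unit L2 norm. The comparison below uses a made-up three-document corpus to show the difference between the two representations.

```python
import numpy as np
from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer

# Hypothetical mini corpus
docs = [
    "what is the best way to learn python",
    "best way to learn cooking",
    "why do people hate mondays",
]

counts = CountVectorizer(stop_words="english").fit_transform(docs)
tfidf = TfidfVectorizer(stop_words="english").fit_transform(docs)

# CountVectorizer: raw integer counts per document and term
print(counts.toarray())

# TfidfVectorizer: real-valued weights, each row scaled to unit L2 norm
print(np.round(tfidf.toarray(), 2))
print(np.linalg.norm(tfidf.toarray(), axis=1))
```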

Now we fit the LDA model.

```python
lda = LatentDirichletAllocation(n_components=n_components, max_iter=20,
                                learning_method='online', random_state=2018)
lda.fit(tf)
```

```
LatentDirichletAllocation(batch_size=128, doc_topic_prior=None,
             evaluate_every=-1, learning_decay=0.7,
             learning_method='online', learning_offset=10.0,
             max_doc_update_iter=100, max_iter=20, mean_change_tol=0.001,
             n_components=100, n_jobs=1, n_topics=None, perp_tol=0.1,
             random_state=2018, topic_word_prior=None,
             total_samples=1000000.0, verbose=0)
```
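After fitting, `components_` holds one row of unnormalized word weights per topic. `transform` returns a document-topic distribution whose rows each sum to one. Taking the argmax of a row assigns the document to its single most probable topic. The sketch below illustrates this on a made-up four-document corpus, with two topics chosen for illustration.

```python
import numpy as np
from sklearn.decomposition import LatentDirichletAllocation
from sklearn.feature_extraction.text import CountVectorizer

# Hypothetical toy corpus with two rough themes
docs = [
    "python code software bug",
    "software engineer code python",
    "cake recipe sugar oven",
    "oven baking cake recipe",
]

X = CountVectorizer().fit_transform(docs)
lda = LatentDirichletAllocation(n_components=2, random_state=0).fit(X)

print(lda.components_.shape)  # (n_topics, n_terms)

doc_topics = lda.transform(X)     # shape (n_documents, n_topics)
print(doc_topics.sum(axis=1))     # each row is a probability distribution
print(doc_topics.argmax(axis=1))  # dominant topic of each document
```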

Investigate the topic model

First, we take a look at the most important words in the topic model.

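```python
def print_top_words(model, feature_names, n_top_words):
    # components_ has one row of word weights per topic
    for topic_idx, topic in enumerate(model.components_):
        message = f"Topic #{topic_idx}: "
        # indices of the n_top_words largest weights, largest first
        message += " ".join([feature_names[i]
                             for i in topic.argsort()[:-n_top_words - 1:-1]])
        print(message)
    print()

# get_feature_names() was removed in scikit-learn 1.2; use get_feature_names_out()
tf_feature_names = tf_vectorizer.get_feature_names_out()
print_top_words(lda, tf_feature_names, n_top_words=10)
```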

Topic #0: military advantages windows pressure disadvantages track active joining employee accepted

Topic #1: views wedding channel origin therapy debt exist exercises existence explain

Topic #2: games percentage potential scientific require pick drug cope exchange executive

Topic #3: time english language american pay medical card care earn training

Topic #4: 2017 jee score marks iit courses changed board film nit

Topic #5: don working technology computer similar professional accept western apple pc

Topic #6: ve reason worst culture happy japan example blue events reaction

Topic #7: major enjoy accomplishments major accomplishments experienced exchange executive exercise exercises exist

Topic #8: best things ways website course apply android south north youtube

Topic #9: free china jobs try post looking rate instagram anti paid

Topic #10: based ideas neet coaching okay systems crack focus creative rock

Topic #11: school university high write australia high school hours credit inspired psychology

Topic #12: buy legal function consider personal clean killed manage property significance

Topic #13: think quora money college engineering important state indians worth internet

Topic #14: different types characteristics multiple platform certificate birth passport smell stage

Topic #15: say right non woman win muslim biggest civil europe population

Topic #16: days end called share case ms shouldn bond shopping 2nd

Topic #17: government president product gay com news impact numbers clear speech

Topic #18: style acting heat cure false condition florida ap exists expensive

Topic #19: love black white chinese popular society paper marriage act grow

Topic #20: indian student average germany visa 20 exams offer german features

Topic #21: children kill energy send husband nuclear cs sense daughter following

Topic #22: hate jews pakistani people loves religions note does exercise exercises

Topic #23: choose instead city 100 didn second kids week period head

Topic #24: theory amazon powerful success cities quantum heavy gone powers blame

Topic #25: america political female prevent liberals art present method source acid

Topic #26: want doing wrong child month problem mind easy close pune

Topic #27: low iq anxiety action dubai fixed exists safety equivalent entry

Topic #28: country book movie affect isn tech master illegal greatest treated

Topic #29: build tax stock plan structure basic trading engine cheap electric

Topic #30: questions guy programming reasons interview asked net narcissist especially voice

Topic #31: test rank pakistan teach seat morning elements kashmir banned candidate

Topic #32: friend question answer group daily correct coming communication nature road

Topic #33: european reach gst actual 2019 lines hope expected experienced experience

Topic #34: people night mother universe sleep middle chances healthy pass compare

Topic #35: good best way best way software companies house management effects places

Topic #36: number happens exam friends phone hair face successful oil explain

Topic #37: world history meaning considered americans religion compared islam baby french

Topic #38: age site studies 18 minimum 14 relationships match attention cloud

Topic #39: better using company create design famous ex eye lives wall

Topic #40: know common ask purpose easily season invented native equation existence

Topic #41: terms avoid fear talking son save block goes double teachers

Topic #42: got left seen differences feeling 50 choice somebody cash anybody

Topic #43: india like man countries muslims girlfriend mba information foreign negative

Topic #44: social media order increase effect blood network heart social media page

Topic #45: eat wear guys convert politics democrats sc republicans quota remain

Topic #46: process data account cause learning delhi safe main travel health

Topic #47: long come open cat break iphone view likely marry does

Topic #48: year years old big rid stay year old team price office

Topic #49: way favorite land little expensive good bad straight alcohol bike connection

Topic #50: use new books self writing air movies program sell russia

Topic #51: believe god says speak stupid spanish officers ready does experienced

Topic #52: day hyderabad near classes spend planet areas regular rent activities

Topic #53: africa thoughts review east differ suicide million century individual fish

Topic #54: person need able marketing leave services products father provide california

Topic #55: job kind war happened uk point pain international away expect

Topic #56: known join vs sun recently strength expect experiences experienced experience

Topic #57: quality videos meant exactly access established central factor expect experience

Topic #58: start human makes today earth humans run animals project preparation

Topic #59: career music bangalore develop scope store subject react singapore memory

Topic #60: stop difficult profile masters destroy highly iim drawing experience expensive

Topic #61: happen water law mass die won eating physical anime outside

Topic #62: dogs personality stories recipes gold dc bed creating identity shot

Topic #63: bad study girl look tips like boyfriend look like feel feel like

Topic #64: power mumbai formed syllabus restaurant 24 effectively added saw best

Topic #65: tv series watch web turn dark sports technical turkey afraid

Topic #66: support available code cars running approach currency wordpress payment certification

Topic #67: light speed laptop factors christians temperature disorder means suggest identify

Topic #68: work trump play role wife 15 russian realize center truth

Topic #69: 2018 10 class making admission national necessary strategy mathematics cbse

Topic #70: did facebook deal 12th meet lead laws room solution john

Topic #71: live google engineer young economy practice poor file branch eu

Topic #72: canada color gas produced cons pros pros cons reliable mistake nepal

Topic #73: students physics required math skills useful knowledge java importance analysis

Topic #74: real going tell parents talk taking treat donald donald trump trump

Topic #75: times past modern ai traditional programs historical people say wasn experienced

Topic #76: examples great colleges private ancient zero real life james built fly

Topic #77: difference really experience future current given education income bring complete

Topic #78: states value improve idea united public united states force police startup

Topic #79: used prepare effective mental hurt formula methods doctors techniques user

Topic #80: having weight british gain religious maths economics switch faster ba

Topic #81: make 12 apps star 11 email fun hand names fix

Topic #82: car family normal sound started result position attracted members sexually

Topic #83: help opinion fight area depression songs crime capital liberal foods

Topic #84: read food story advice reading rights novel global electrical drink

Topic #85: does mean does mean word dream cell crush smart comes fact

Topic #86: change usa service term short related japanese christian race france

Topic #87: possible science causes body actually degree control male model set

Topic #88: app mobile download application natural chance dating developer higher caused

Topic #89: just women men getting hard date exist skin met like

Topic #90: field options racist gate moment grade maximum points centre biology

Topic #91: cost single party contact digital chennai produce hire pictures respond

Topic #92: determine heard best book piece length velocity nazi exist existence experiences

Topic #93: feel thing girls game form video understand lot list let

Topic #94: living bank facts answers interesting replace loan meat concrete po

Topic #95: learn online type space cut thinking fake matter diet address

Topic #96: life business market development words fat growth key gift like

Topic #97: sex relationship doesn death months wants positive animal wish hot

Topic #98: true home place dog lose starting red modi army taken

Topic #99: benefits song line upsc brown construction practical protein expected experienced
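`argsort` sorts ascending. The slice `topic.argsort()[:-n_top_words - 1:-1]` in `print_top_words` therefore walks that result backwards from the end. It yields the indices of the `n_top_words` largest weights, largest first. The quick check below uses a made-up weight vector.

```python
import numpy as np

weights = np.array([0.1, 2.5, 0.7, 3.0, 1.2])  # hypothetical topic-word weights
n_top_words = 3

top_idx = weights.argsort()[:-n_top_words - 1:-1]
print(top_idx)  # [3 1 4]: indices of the 3 largest weights, in descending order

# Equivalent, arguably more readable formulation
alt_idx = np.argsort(weights)[::-1][:n_top_words]
print(alt_idx)  # [3 1 4]
```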

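Now we can look at the label distribution in each of the topics. We assign every question to its most probable topic, the argmax of its document-topic distribution. Then we compute the share of insincere questions per topic.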

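```python
topics = lda.transform(tf)  # document-topic distribution, one row per question
dominant_topic = np.argmax(topics, axis=1)  # most probable topic of each question

for t in range(n_components):
    sdf = df_sample[dominant_topic == t]
    sn = sdf.shape[0]
    print("topic {} ({} questions): {:.1%} of the questions are insincere".format(t, sn, sdf.target.sum() / sn))
```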

topic 0 (4618 questions): 2.6% of the questions are insincere

topic 1 (269 questions): 3.3% of the questions are insincere

topic 2 (303 questions): 3.6% of the questions are insincere

topic 3 (1810 questions): 10.7% of the questions are insincere

topic 4 (1507 questions): 2.4% of the questions are insincere

topic 5 (784 questions): 6.1% of the questions are insincere

topic 6 (1028 questions): 3.9% of the questions are insincere

topic 7 (189 questions): 5.3% of the questions are insincere

topic 8 (1698 questions): 3.8% of the questions are insincere

topic 9 (899 questions): 7.3% of the questions are insincere

topic 10 (713 questions): 2.7% of the questions are insincere

topic 11 (1175 questions): 2.0% of the questions are insincere

topic 12 (940 questions): 4.0% of the questions are insincere

topic 13 (2178 questions): 9.1% of the questions are insincere

topic 14 (849 questions): 2.1% of the questions are insincere

topic 15 (1196 questions): 11.0% of the questions are insincere

topic 16 (560 questions): 4.1% of the questions are insincere

topic 17 (1427 questions): 12.8% of the questions are insincere

topic 18 (354 questions): 3.1% of the questions are insincere

topic 19 (1618 questions): 19.4% of the questions are insincere

topic 20 (632 questions): 4.9% of the questions are insincere

topic 21 (873 questions): 8.1% of the questions are insincere

topic 22 (372 questions): 31.7% of the questions are insincere

topic 23 (1289 questions): 5.7% of the questions are insincere

topic 24 (456 questions): 6.1% of the questions are insincere

topic 25 (952 questions): 5.5% of the questions are insincere

topic 26 (1345 questions): 7.7% of the questions are insincere

topic 27 (339 questions): 8.0% of the questions are insincere

topic 28 (949 questions): 3.0% of the questions are insincere

topic 29 (917 questions): 3.1% of the questions are insincere

topic 30 (900 questions): 4.9% of the questions are insincere

topic 31 (552 questions): 6.7% of the questions are insincere

topic 32 (1042 questions): 6.9% of the questions are insincere

topic 33 (268 questions): 4.5% of the questions are insincere

topic 34 (1220 questions): 8.3% of the questions are insincere

topic 35 (1680 questions): 1.9% of the questions are insincere

topic 36 (1667 questions): 2.2% of the questions are insincere

topic 37 (1493 questions): 10.2% of the questions are insincere

topic 38 (401 questions): 6.7% of the questions are insincere

topic 39 (1446 questions): 5.0% of the questions are insincere

topic 40 (786 questions): 6.0% of the questions are insincere

topic 41 (929 questions): 4.7% of the questions are insincere

topic 42 (638 questions): 6.4% of the questions are insincere

topic 43 (2057 questions): 11.9% of the questions are insincere

topic 44 (970 questions): 2.6% of the questions are insincere

topic 45 (548 questions): 15.5% of the questions are insincere

topic 46 (1815 questions): 4.2% of the questions are insincere

topic 47 (1445 questions): 5.9% of the questions are insincere

topic 48 (1658 questions): 7.0% of the questions are insincere

topic 49 (611 questions): 2.6% of the questions are insincere

topic 50 (1774 questions): 4.6% of the questions are insincere

topic 51 (398 questions): 20.9% of the questions are insincere

topic 52 (757 questions): 4.5% of the questions are insincere

topic 53 (581 questions): 5.5% of the questions are insincere

topic 54 (1189 questions): 4.6% of the questions are insincere

topic 55 (1095 questions): 6.1% of the questions are insincere

topic 56 (352 questions): 1.1% of the questions are insincere

topic 57 (343 questions): 2.0% of the questions are insincere

topic 58 (1207 questions): 4.6% of the questions are insincere

topic 59 (758 questions): 2.4% of the questions are insincere

topic 60 (339 questions): 6.8% of the questions are insincere

topic 61 (1304 questions): 8.6% of the questions are insincere

topic 62 (500 questions): 5.0% of the questions are insincere

topic 63 (1483 questions): 7.3% of the questions are insincere

topic 64 (427 questions): 3.0% of the questions are insincere

topic 65 (618 questions): 5.0% of the questions are insincere

topic 66 (516 questions): 2.5% of the questions are insincere

topic 67 (787 questions): 5.1% of the questions are insincere

topic 68 (1200 questions): 9.5% of the questions are insincere

topic 69 (976 questions): 4.8% of the questions are insincere

topic 70 (1621 questions): 4.3% of the questions are insincere

topic 71 (789 questions): 3.3% of the questions are insincere

topic 72 (484 questions): 3.3% of the questions are insincere

topic 73 (1136 questions): 2.9% of the questions are insincere

topic 74 (1386 questions): 16.4% of the questions are insincere

topic 75 (353 questions): 4.2% of the questions are insincere

topic 76 (915 questions): 2.6% of the questions are insincere

topic 77 (1238 questions): 2.7% of the questions are insincere

topic 78 (1509 questions): 7.9% of the questions are insincere

topic 79 (697 questions): 2.0% of the questions are insincere

topic 80 (555 questions): 3.8% of the questions are insincere

topic 81 (1285 questions): 5.8% of the questions are insincere

topic 82 (499 questions): 7.6% of the questions are insincere

topic 83 (1059 questions): 7.1% of the questions are insincere

topic 84 (912 questions): 3.3% of the questions are insincere

topic 85 (1905 questions): 3.1% of the questions are insincere

topic 86 (866 questions): 11.1% of the questions are insincere

topic 87 (1639 questions): 3.6% of the questions are insincere

topic 88 (1015 questions): 2.5% of the questions are insincere

topic 89 (950 questions): 20.6% of the questions are insincere

topic 90 (900 questions): 5.0% of the questions are insincere

topic 91 (618 questions): 3.7% of the questions are insincere

topic 92 (305 questions): 4.6% of the questions are insincere

topic 93 (1495 questions): 5.4% of the questions are insincere

topic 94 (495 questions): 2.8% of the questions are insincere

topic 95 (849 questions): 3.5% of the questions are insincere

topic 96 (813 questions): 3.1% of the questions are insincere

topic 97 (1072 questions): 9.4% of the questions are insincere

topic 98 (1287 questions): 11.9% of the questions are insincere

topic 99 (384 questions): 0.8% of the questions are insincere
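The per-topic loop above can also be written as a single `groupby` over the dominant topic. This makes it easy to rank topics by their insincere share relative to the overall rate, a ratio sometimes called lift. Below is a minimal sketch on made-up labels and topic assignments. The `min_size` threshold is a hypothetical choice that keeps tiny topics from topping the ranking on a handful of questions.

```python
import pandas as pd

# Hypothetical toy data: label and dominant topic for twelve questions
toy = pd.DataFrame({
    "target":   [0, 1, 1, 0, 0, 0, 0, 1, 0, 0, 1, 1],
    "dominant": [0, 0, 0, 1, 1, 1, 1, 2, 2, 2, 3, 3],
})

base_rate = toy["target"].mean()
stats = toy.groupby("dominant")["target"].agg(n="size", insincere_share="mean")
stats["lift"] = stats["insincere_share"] / base_rate

min_size = 3  # hypothetical threshold: ignore topics with fewer questions
ranked = stats[stats["n"] >= min_size].sort_values("lift", ascending=False)
print(ranked)
```

Topic 3 has the highest share in this toy data but only two questions, so the size filter drops it from the ranking.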

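So we see that some topics have a high share of insincere questions. Topic 22, for example, is at 31.7%, about five times the overall rate of 6.2%. Judging by its top words (hate, jews, pakistani, religions), it seems to be related to hatred of ethnic and religious groups. Let’s sample questions from this topic.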

```python
df_topic_sample = df_sample[np.argmax(topics, axis=1) == 22].sample(20)
print("\n".join(["{}:{}".format(l, q) for l, q in zip(df_topic_sample.target.values,
                                                       df_topic_sample.question_text.values.tolist())]))
```

0:Why are Jews seen as stereotypically materialistic/frugal?

0:Why does the mainstream media hate Trump so much?

1:Why do you hate Jews?

0:What would your reaction be if you suddenly get a call from the boy that you finally got over, saying he loves you and he always did?

0:What are some examples of Zoroastrian influences on Indian culture and religions?

0:Were there any Nazis who befriended Jews, or perhaps people who were friends with Jews before they became Nazis?

0:Why does media hate Trump but love Obama?

0:Do religions shape the sharp feelings?

0:Can a Pakistani open a bank account in India online?

1:Is it true that minorities don't hate white supremacy, rather that they hate not being ranked higher within it?

1:Why aren't there more books about the fact that Ottoman Jews enslaved Slavic women? I want to completely investigate this topic in order to understand why Ottoman Jews did this.

1:Why is there a Pakistani ocean for the Pakistanis?

1:When will Hindus fight back against the assault of Abrahamic religions?

0:How can I send an Anonymous note to someone on Facebook?

0:What should be a child's note to his or her parents?

1:Why do American Jews feel phony?

1:Do you think white Christians are the most racist and bigoted people on earth I mean just look at their history with Africans, Jews and now Muslims?

0:How do you separate non practicing "secular" Jews from practicing Jews like yourself? What are their main differences?

0:Does America hate equality?

0:Should anyone change himself for the person to whom he loves or has an attachment?

There are also topics with a low share of insincere questions, like topic 35 at 1.9%. Judging by its top words, this topic seems to deal with software companies in some way. Let’s see.

```python
df_topic_sample = df_sample[np.argmax(topics, axis=1) == 35].sample(20)
print("\n".join(["{}:{}".format(l, q) for l, q in zip(df_topic_sample.target.values,
                                                       df_topic_sample.question_text.values.tolist())]))
```

0:Is it good to do Ph. D from IITs?

0:When can I have a post-workout shake if a train just before dinner?

0:What is the best way to prevent foodborne illness?

0:Is being agnostic the best way of being logical in today's world?

0:What are exciting career choices after B.E in ECE? I have completed my B.E and I'm interested in software or management related courses to do.

0:What is the best way to explain the difference between scientific consensus and science by democracy?

0:What are your best KPI for Incident Management - Service Desk / IT support, and why?

0:Is the VFX industry improving?

0:How much should I pitch for business software to my clients in India?

0:What do you think is the best (in any way you want) cover page of a comic book?

0:What is the best way for introvert to suceed in social life?

0:What are those people doing currently who qualified GATE(CSE)?

1:How many secretly wish that the republican house members on the train that wrecked today had been in the garbage truck instead of on the train?

0:What has the PostNet company achieved in the business services industry?

0:Do software companies still keep their engineers once they turn 45-50 and get outdated from the latest advancements in technologies?

0:What kind of franchises are currently going good in the market under 7-10 lakhs?

0:What's the best way to make creme brulee?

0:What is the best way to improve knowledge?

0:What is the best way to repair Amish heaters?

0:How much would Zomato's table management software cost to build?

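Hm, it is not really clear what is going on in this topic. There is no shared subject. The common thread seems to be the phrase “best way”, one of the topic’s top terms, which appears in many of the sampled questions.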

Topics of insincere questions

This time we fit a topic model only on the insincere questions of the full dataset, to understand what is going on there. We use raw term counts from CountVectorizer, unigrams only, and 10 topics.

```python
# Raw term counts (unigrams only), fitted on all insincere questions
tf_vectorizer = CountVectorizer(max_df=0.95, min_df=2,
                                max_features=n_features,
                                stop_words='english')
tf = tf_vectorizer.fit_transform(df[df.target == 1].question_text)

lda = LatentDirichletAllocation(n_components=10, max_iter=5,
                                learning_method='online', random_state=2018)
lda.fit(tf)
```

```
LatentDirichletAllocation(batch_size=128, doc_topic_prior=None,
             evaluate_every=-1, learning_decay=0.7,
             learning_method='online', learning_offset=10.0,
             max_doc_update_iter=100, max_iter=5, mean_change_tol=0.001,
             n_components=10, n_jobs=1, n_topics=None, perp_tol=0.1,
             random_state=2018, topic_word_prior=None,
             total_samples=1000000.0, verbose=0)
```

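Let’s look at the most relevant words in each topic.

```python
# get_feature_names() was removed in scikit-learn 1.2; use get_feature_names_out()
tf_feature_names = tf_vectorizer.get_feature_names_out()
print_top_words(lda, tf_feature_names, n_top_words=10)
```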

Topic #0: did india modi muslims pakistan russia live immigrants world bjp

Topic #1: americans muslims american countries world islam new asian british western

Topic #2: country china woman america christians usa non rape liberal race

Topic #3: trump does president donald man obama old year really bad

Topic #4: feel having aren big sexual things fake boys history doing

Topic #5: people hindus think make look just religion conservatives does state

Topic #6: people indians know hate gay democrats true north wrong come

Topic #7: quora liberals sex questions children like better does kill guys

Topic #8: women men white indian black good like stop don instead

Topic #9: people like girls don chinese muslim does believe jews god

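These topics are much clearer, and it’s easy to see what is happening. They revolve around politics (Trump, Modi, liberals, democrats), religion and ethnicity (Muslims, Hindus, Jews, Christians), and gender and sexuality. Evidently, some topics attract insincere questions more than others.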

I hope you learned something you can use in your own text analysis work. Stay tuned for the next post in the series, on models based on named entity recognition.