Last weekend, PyCon DE and PyData Berlin joined forces in Berlin for a great conference that I was lucky to attend. The speaker line-up was strong, and it was often hard to choose which talk or tutorial to attend. Here is an overview of the talks I liked, along with the respective material.

Day 1

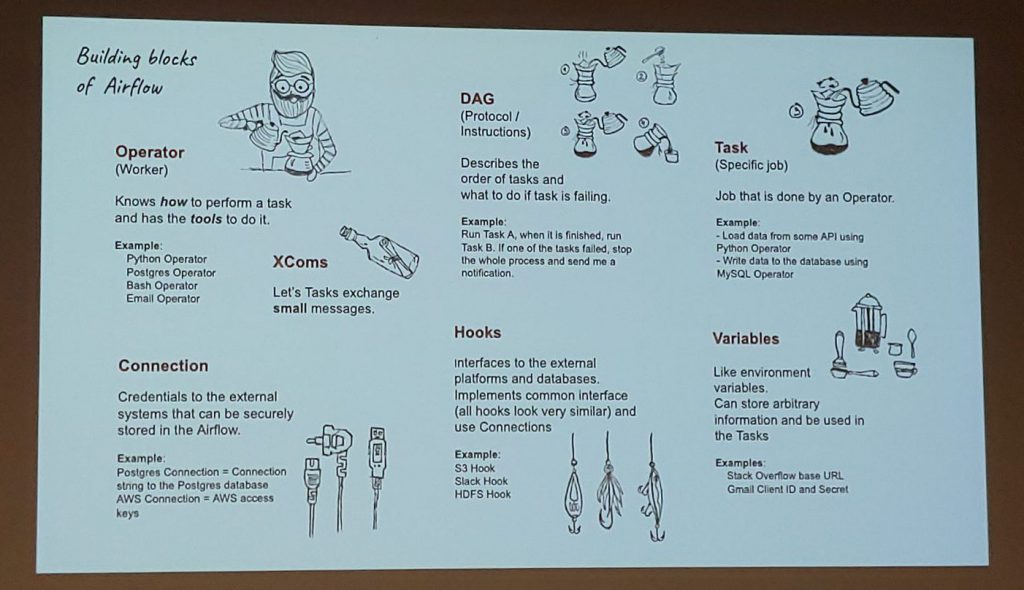

Apache Airflow for beginners

by Varya Karpenko // material

Apache Airflow is an open source project that allows you to programmatically create, schedule, and monitor sequences of tasks. Varya went over the basic concepts and building blocks of Airflow, such as DAGs, Operators, Tasks, Hooks, Variables, and XComs. And she had great slides!
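
To make the DAG idea a bit more concrete, here is a minimal sketch in plain Python. It is not Airflow's API: the task names are hypothetical, and the standard-library `graphlib` module (Python 3.9+) only stands in for the part of a scheduler that works out a valid execution order from task dependencies.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
# In Airflow these would be tasks inside a DAG; here they are just strings.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "train_model": {"transform"},
    "report": {"transform"},
}


def run_order(deps):
    """Return one execution order that respects every dependency."""
    return list(TopologicalSorter(deps).static_order())


order = run_order(dependencies)
print(order)
```

Because the graph is acyclic, such an order always exists. With a cycle (say, `extract` depending on `report`), `graphlib` raises a `CycleError`, which is the same reason a workflow has to be a DAG in the first place.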

Production-level data pipelines that make everyone happy using Kedro

by Yetunde Dada

Yetunde walked the audience through the software principles that data engineers and data scientists should consider applying to their code to make it easier to deploy to production. Then she introduced us to Kedro, an open source Python library that simplifies the deployment workflow.

Day 2

Airflow: your ally for automating machine learning and data pipelines

by Enrica Pasqua and Bahadir Uyarer // material

In this workshop we learned how a basic Airflow workflow works. Enrica and Bahadir then walked us through setting up an instance that orchestrates an inference pipeline for a machine learning model. It was a really well-designed and approachable tutorial, based on Airflow running in a Docker container.

Dash: Interactive Data Visualization Web Apps with no Javascript

by Dom Weldon // material

This talk gave a nice overview of Dash. Dom showed how it works and what it can be used for, before outlining some of the common problems that emerge when data scientists are let loose to produce web applications. He also outlined effective working practices for getting started with cool interactive statistical web applications.

Day 3

My own Tutorial: Managing the end-to-end machine learning lifecycle with MLFlow

by Tobias Sterbak // material

I also had the opportunity to share some of my experiences with handling the machine learning lifecycle with MLflow. It was a nice experience and I liked the more hands-on way of presenting.

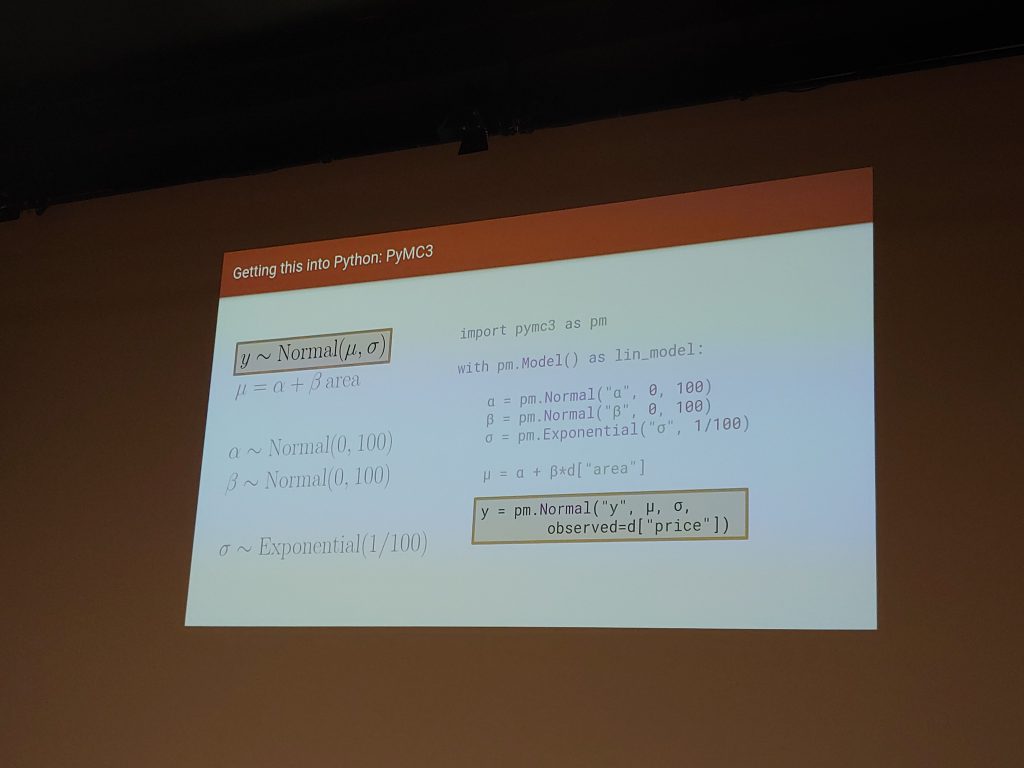

A Bayesian Workflow with PyMC and ArviZ

by Corrie Bartelheimer // material

Corrie walked the audience through a basic Bayesian workflow with PyMC3 and model inspection with ArviZ, using a real-world use case of house price modelling. I found the parts about picking and designing the right prior and about pitfalls with model convergence most helpful.
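
As a small illustration of why the prior matters, here is a sketch in plain Python rather than PyMC3. It is not taken from the talk: it assumes a textbook normal model with known noise, where the posterior mean has a closed form, and the prices and prior settings are made up for the example.

```python
def posterior_mean(prior_mean, prior_sd, data, noise_sd):
    """Posterior mean of a normal mean under a normal prior and known noise sd."""
    n = len(data)
    prior_precision = 1.0 / prior_sd ** 2   # how strongly the prior pulls
    data_precision = n / noise_sd ** 2      # how strongly the data pull
    sample_mean = sum(data) / n
    # Precision-weighted average of the prior mean and the sample mean.
    return (prior_precision * prior_mean + data_precision * sample_mean) / (
        prior_precision + data_precision
    )


# Made-up house prices in thousands of euros, purely for illustration.
prices = [310.0, 295.0, 330.0, 305.0]

# Same (deliberately poor) prior mean, but very different prior widths.
vague = posterior_mean(prior_mean=100.0, prior_sd=1000.0, data=prices, noise_sd=20.0)
tight = posterior_mean(prior_mean=100.0, prior_sd=5.0, data=prices, noise_sd=20.0)
print(vague, tight)
```

With the wide prior the estimate stays close to the sample mean of the data, while the narrow prior drags it far towards the prior mean even though the data disagree. In a real model there is usually no closed form, which is where sampling with PyMC3 and checking the results with ArviZ come in.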

Wrap-up

There were many more amazing talks that I attended, and too many that I skipped in order to talk to great people. All in all, it was an amazing conference!

I learned a ton of new things and met great people. I especially learned a lot about Airflow. It's nice to see the community shifting from fancy use cases to production-focused machine learning and data management. Diversity and ethics were also big topics. Overall, it was a great event with an amazing community, and I'm proud to be part of it. I'm already looking forward to the next PyData conference.