This article heavily relys on "Stop Explaining Black Box Machine Learning Models for High Stakes Decisions and Use Interpretable Models Instead" by Cynthia Rudin and finally on some of my personal experiences. I will mainly focus on technical issues and leave out most of the governance and ethics related issues that derive from these. More about this can be found in Cynthia’s paper.

Nowadays, there are a lot of people talking about and advertising the methods of “Explainable AI” (XAI). Rather than trying to create models that are inherently interpretable, there has been a recent explosion of talk and usage of “Explainable ML”, where a second (post-hoc) model is created to explain the first black box model. This is problematic! The derived explanations are often not reliable, and can be misleading. We will discuss the problems with Explainable ML and show how some of those problems can be solved with interpretable machine learning.

The general issue with Explainable ML

All explanations must be wrong.

Cynthia Rudin

If the explanation was completely faithful to what the original model computes, the explanation would equal the original model, and one would not need the original model in the first place. In this case, the original model would already be interpretable.

All explanations rely on human interpretation.

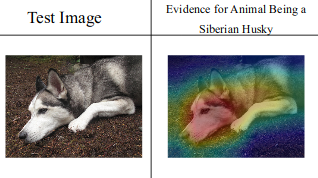

If you have an explanation to a given prediction, it’s up to you as a human to understand, interpret and reason about it. This makes it easy to use explanations to rationalize believes about the world, the data and the model itself. As humans we are primed to look for evidence for our case. So if we look at the following test image and the saliency map explanation we would find it very fitting.

But if we look at what evidence for being a “Transverse Flute” this method provides, we find it to be completely useless. The explanations look essentially the same for both classes.

The issues with explanation algorithms

There are several algorithmic approaches to explain predictions of machine learning models or specific predictions of them. There are two groups of methods.

Model-agnostic methods rely on using natural arising features to explain predictions and/or models without using any information about the model. Famous methods are LIME, Shap-values, partial dependence plots and permutation feature importance. This article discusses some of the problems that arise with LIME.

On the other hand we have model specific methods, which use internal properties like gradients or parameters of the modelling algorithm to extract explanations. Famous examples are saliency map and attention weights, but even feature importance values by random forest or regression parameters fall in this category.

All of the above methods can be used to explain the behavior and predictions of trained machine learning models. But the interpretation methods might not work well in the following cases:

- the model models interactions (or the features are unclear)

- features strongly correlate with each other

- the model does not correctly model causal relationships

- parameters of the interpretation method are not set correctly

In many application cases multiple of the above cases are in fact a problem.

What to do about it?

Since this topic is still a very active research area, we might see new results and some improvements in the future. But I guess the general problem will never be completely solved, since explanations can be arbitrarily complex and are always in need of human interpretation. In the meantime I suggest the following points.

- Be careful and critical when interpreting explanations! Never trust the method and base high-stake decisions only on a larger framework of understanding and explanations.

- Don't expose explanations to lay users or consumers of your applications. If you need to, do so carefully and do exhaustive user-testing before releasing.

- Use the techniques of explainable AI for debugging your own algorithms and data set.

- Use careful monitoring to see what your method is doing and evaluate on the long-run.

Wrap-up

We saw, what general and specific problems can arise in the context of explainable AI. Currently there is no way around them, so be careful whenever you use these methods. But nevertheless, these methods can be handy tools for both model and data set debugging and can help machine learning practitioners to understand their model and data set better.

Resources

- "Stop Explaining Black Box Machine Learning Models for High Stakes Decisions and Use Interpretable Models Instead"

- "Attention is not explanation"

- https://compstat-lmu.github.io/iml_methods_limitations/