Often in text classification, we use so called black-box classifiers. By black-box classifiers I mean a classification system where the internal workings are completely hidden from you. A famous example are deep neural nets, in text classification often recurrent or convolutional neural nets. But also linear models with a bag of words representation can be considered black-box classifiers, because nobody can fully make sense of thousands of features contributing to a prediction.

In general you start your classification task by collecting a data set. Then you extract some features and fit a model. After fitting the model you check some classification metrics like accuracy or f1-score. And if the model performs well enough you release it to production. But these metrics don’t show you the whole picture. For example, they don’t reveal dataset leakage. Especially in text classification leakage can be really subtle and hard to detect. It could mean, that a certain class of your data contains a lot of redirected emails. So the model would learn that redirect means this class. But this might not be a inherent property of the thing you want to predict, but a artifact of your data collection. To detect this kind of leakage you need a special approach to trust your model.

In this post, I’ll show you how you can use an algorithm called LIME to inspect the prediction of your black-box model. We will start with a simple implementation and then use the more sophisticated algorithm from eli5, a python package for debugging machine learning models.

The LIME algorithm

by Ribeiro et al, 2016

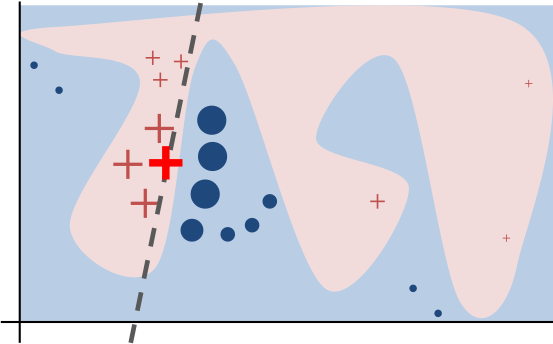

Our goal is to understand the prediction of an arbitrary model for a certain sample. The desired explanation should be local,i.e. must correspond to how the model behaves in the neighborhood of the instance being predicted. It should be interpretable, i.e. it provides qualitative understanding between the input variables and the response and must take into account the user’s limitations. Also, it should be able to explain any model, hence it should be model-agnostic. These properties give LIME (Locally Interpretable Model-agnostic Explanations) it’s name.

How it works

Let’s look at the 20newsgroups dataset. This is a dataset that contains some discussions about news articles. We can get it from scikit-learn and we only take the classes ['alt.atheism', 'soc.religion.christian', 'comp.graphics', 'sci.med'].

from sklearn.datasets import fetch_20newsgroups

categories = ['alt.atheism', 'soc.religion.christian', 'comp.graphics', 'sci.med']

twenty_train = fetch_20newsgroups(subset='train', categories=categories, shuffle=True,

random_state=42, remove=('headers', 'footers'))

twenty_test = fetch_20newsgroups(subset='test', categories=categories, shuffle=True,

random_state=42, remove=('headers', 'footers'))

i = 125

print("Class: {}".format(twenty_train.target_names[twenty_train.target[i]]))

print("-"*20); print()

sample = twenty_train.data[i]; print(sample)

Class: alt.atheism

--------------------

In article <1993Apr3.153552.4334@mac.cc.macalstr.edu>, acooper@mac.cc.macalstr.edu writes:

|> In article <1pint5$1l4@fido.asd.sgi.com>, livesey@solntze.wpd.sgi.com (Jon Livesey) writes

>

> Well, Germany was hardly the ONLY country to discriminate against the

> Jews, although it has the worst reputation because it did the best job

> of expressing a general European dislike of them. This should not turn

> into a debate on antisemitism, but you should also point out that Luther's

> antiSemitism was based on religious grounds, while Hitler's was on racial

> grounds, and Wagnmer's on aesthetic grounds. Just blanketing the whole

> group is poor analysis, even if they all are bigots.

I find these to be intriguing remarks. Could you give us a bit

more explanation here? For example, which religion is anti-semitic,

and which aesthetic?

We train a black-box classifier.

import numpy as np

from scipy.spatial import distance

from sklearn.feature_extraction.text import TfidfVectorizer, CountVectorizer

from sklearn.svm import SVC

from sklearn.linear_model import LogisticRegression

from sklearn.decomposition import TruncatedSVD

from sklearn.pipeline import Pipeline, make_pipeline

from sklearn.feature_selection import SelectKBest

from sklearn.feature_selection import chi2

As a black-box classifier we use a kernel svm with LSA features. This is clearly non-linear and hard to interpret. In a following post I’ll show you how to do it with neural networks in keras.

# LSA features

vec = TfidfVectorizer(min_df=3, stop_words='english', ngram_range=(1, 2))

svd = TruncatedSVD(n_components=100, n_iter=7, random_state=42)

lsa = make_pipeline(vec, svd)

# SVM with rbf-kernel

clf = SVC(C=150, gamma=2e-2, probability=True, kernel="rbf")

text_clf = make_pipeline(lsa, clf)

text_clf.fit(twenty_train.data, twenty_train.target)

print("Accuracy: {:.1%}".format(text_clf.score(twenty_test.data, twenty_test.target)))

Accuracy: 89.0%

Let’s explain the predictions of this model

The idea is that we generate a fake dataset of perturbed samples from the example we want to explain the prediction from.

import random

def get_perturbed_sample(sample):

'''Sample words from the text sample uniformly.'''

words = sample.split(" ")

n_words = random.randrange(0, len(words))

idx = random.sample(list(range(0,len(words))), k=n_words)

return " ".join([words[i] for i in sorted(idx)])

print(sample)

In article <1993Apr3.153552.4334@mac.cc.macalstr.edu>, acooper@mac.cc.macalstr.edu writes:

|> In article <1pint5$1l4@fido.asd.sgi.com>, livesey@solntze.wpd.sgi.com (Jon Livesey) writes

>

> Well, Germany was hardly the ONLY country to discriminate against the

> Jews, although it has the worst reputation because it did the best job

> of expressing a general European dislike of them. This should not turn

> into a debate on antisemitism, but you should also point out that Luther's

> antiSemitism was based on religious grounds, while Hitler's was on racial

> grounds, and Wagnmer's on aesthetic grounds. Just blanketing the whole

> group is poor analysis, even if they all are bigots.

I find these to be intriguing remarks. Could you give us a bit

more explanation here? For example, which religion is anti-semitic,

and which aesthetic?

Look at a perturbed sample to this instance

print(get_perturbed_sample(sample))

<1pint5$1l4@fido.asd.sgi.com>, the reputation the This

> aesthetic intriguing us is

Now get a lot of perturbed samples and predict on them

perturbed_samples = [get_perturbed_sample(sample) for i in range(5000)]

Then we use the trained black-box model to get predictions for each example in a generated dataset.

perturbed_predictions = text_clf.predict(perturbed_samples)

The next thing we have to do is to pick an interpretable representation, in our case a bag of words, a way to restrict the complexity of the explanation and a model we can interpret.

explainer = Pipeline([

("BoW", CountVectorizer()), # interpretable representation

("selectK", SelectKBest(k=10, score_func=chi2)), # limit the complexity of the explanation

("lr", LogisticRegression()) # weighted interpretable model

])

Then we compute the weights for the linear model by the exponential kernel in cosine distance function and fit the explainer model on the predictions of the text classifier

vec = CountVectorizer(binary=True)

sigma = 1.0

samples_vec = vec.fit_transform(perturbed_samples); samples_vec = samples_vec.todense()

exp_kernel_cosine = lambda x, y: np.exp(-distance.cosine(vec.transform([x]).todense()[0], y)**2 / sigma**2)

weights = np.nan_to_num([exp_kernel_cosine(sample, s) for s in samples_vec])

explainer.fit(perturbed_samples, perturbed_predictions, lr__sample_weight=weights)

Pipeline(memory=None,

steps=[('BoW', CountVectorizer(analyzer='word', binary=False, decode_error='strict',

dtype=<class 'numpy.int64'>, encoding='utf-8', input='content',

lowercase=True, max_df=1.0, max_features=None, min_df=1,

ngram_range=(1, 1), preprocessor=None, stop_words=None,

strip_...ty='l2', random_state=None, solver='liblinear', tol=0.0001,

verbose=0, warm_start=False))])

How well does it approximate the black-box model?

new_pert_samples = [get_perturbed_sample(sample) for i in range(1000)]

print("Score: {:.1%}".format(explainer.score(new_pert_samples, text_clf.predict(new_pert_samples))))

Score: 89.7%

Now we explain the original example through parameters of the white-box model. First get the important features/words from our explainer model…

inv_vocab = {v: k for k, v in explainer.steps[0][1].vocabulary_.items()}

important_words = [inv_vocab[i] for i in explainer.steps[1][1].pvalues_.argsort()[:10][::-1]]

… and then we look at the explanation .

p = explainer.predict_proba([sample])[0]

for c in explainer.steps[2][1].classes_:

print("> {}".format(twenty_train.target_names[c]))

print(f"probability: {p[c]:.1%}"); print("-"*20)

for w, v in zip(important_words, explainer.steps[2][1].coef_[c]):

print(f"{w}: {v:.3}")

print()

> alt.atheism

probability: 100.0%

--------------------

which: 0.977

article: 2.07

writes: 0.284

was: 2.12

grounds: 0.162

livesey: 2.07

sgi: 0.269

com: 0.193

on: 0.336

the: 1.52

> comp.graphics

probability: 0.0%

--------------------

which: -0.906

article: -1.66

writes: -0.362

was: -1.22

grounds: -0.0914

livesey: -1.66

sgi: -0.114

com: -0.0423

on: -0.1

the: -1.26

> sci.med

probability: 0.0%

--------------------

which: 0.199

article: -1.49

writes: 0.211

was: -2.29

grounds: -0.163

livesey: -1.49

sgi: -0.347

com: -0.642

on: -0.545

the: -0.489

> soc.religion.christian

probability: 0.0%

--------------------

which: -1.33

article: -1.31

writes: -0.247

was: -1.81

grounds: -0.0721

livesey: -1.31

sgi: -0.14

com: 0.224

on: -0.148

the: -1.08

print(sample)

In article <1993Apr3.153552.4334@mac.cc.macalstr.edu>, acooper@mac.cc.macalstr.edu writes:

|> In article <1pint5$1l4@fido.asd.sgi.com>, livesey@solntze.wpd.sgi.com (Jon Livesey) writes

>

> Well, Germany was hardly the ONLY country to discriminate against the

> Jews, although it has the worst reputation because it did the best job

> of expressing a general European dislike of them. This should not turn

> into a debate on antisemitism, but you should also point out that Luther's

> antiSemitism was based on religious grounds, while Hitler's was on racial

> grounds, and Wagnmer's on aesthetic grounds. Just blanketing the whole

> group is poor analysis, even if they all are bigots.

I find these to be intriguing remarks. Could you give us a bit

more explanation here? For example, which religion is anti-semitic,

and which aesthetic?

We see, that tokens like macalstr or livesey are pretty important but are domain or person names. Clearly we want our model not to associate atheism with the names of some people that commented in some forum.

Let’s look at eli5

The package eli5 provides a bunch handy tools to inspect your machine learning models. You can get the python package here: https://github.com/TeamHG-Memex/eli5. The TextExplainer class of eli5 provides the following explanation for our sample using the LIME algorithm.

import eli5

from eli5.lime import TextExplainer

te = TextExplainer(random_state=42)

te.fit(sample, text_clf.predict_proba)

te.show_prediction(target_names=twenty_train.target_names)

y=alt.atheism (probability 1.000, score 9.022) top features

| Contribution? | Feature |

|---|---|

| +9.548 | Highlighted in text (sum) |

| -0.526 | <BIAS> |

in article <1993apr3.153552.4334@mac.cc.macalstr.edu>, acooper@mac.cc.macalstr.edu writes: |> in article <1pint5$1l4@fido.asd.sgi.com>, livesey@solntze.wpd.sgi.com (jon livesey) writes > > well, germany was hardly the only country to discriminate against the > jews, although it has the worst reputation because it did the best job > of expressing a general european dislike of them. this should not turn > into a debate on antisemitism, but you should also point out that luther's > antisemitism was based on religious grounds, while hitler's was on racial > grounds, and wagnmer's on aesthetic grounds. just blanketing the whole > group is poor analysis, even if they all are bigots.

i find these to be intriguing remarks. could you give us a bit more explanation here? for example, which religion is anti-semitic, and which aesthetic?

y=comp.graphics(probability 0.000, score -8.065) top features

| Contribution? | Feature |

|---|---|

| +0.013 | <BIAS> |

| -8.077 | Highlighted in text (sum) |

in article <1993apr3.153552.4334@mac.cc.macalstr.edu>, acooper@mac.cc.macalstr.edu writes: |> in article <1pint5$1l4@fido.asd.sgi.com>, livesey@solntze.wpd.sgi.com (jon livesey) writes > > well, germany was hardly the only country to discriminate against the > jews, although it has the worst reputation because it did the best job > of expressing a general european dislike of them. this should not turn > into a debate on antisemitism, but you should also point out that luther's > antisemitism was based on religious grounds, while hitler's was on racial > grounds, and wagnmer's on aesthetic grounds. just blanketing the whole > group is poor analysis, even if they all are bigots.

i find these to be intriguing remarks. could you give us a bit more explanation here? for example, which religion is anti-semitic, and which aesthetic?

y=sci.med (probability 0.000, score -12.561) top features

| Contribution? | Feature |

|---|---|

| -0.189 | <BIAS> |

| -12.372 | Highlighted in text (sum) |

in article <1993apr3.153552.4334@mac.cc.macalstr.edu>, acooper@mac.cc.macalstr.edu writes: |> in article <1pint5$1l4@fido.asd.sgi.com>, livesey@solntze.wpd.sgi.com (jon livesey) writes > > well, germany was hardly the only country to discriminate against the > jews, although it has the worst reputation because it did the best job > of expressing a general european dislike of them. this should not turn > into a debate on antisemitism, but you should also point out that luther's > antisemitism was based on religious grounds, while hitler's was on racial > grounds, and wagnmer's on aesthetic grounds. just blanketing the whole > group is poor analysis, even if they all are bigots.

i find these to be intriguing remarks. could you give us a bit more explanation here? for example, which religion is anti-semitic, and which aesthetic?

y=soc.religion.christian (probability 0.000, score -9.400) top features

| Contribution? | Feature |

|---|---|

| -0.218 | <BIAS> |

| -9.182 | Highlighted in text (sum) |

in article <1993apr3.153552.4334@mac.cc.macalstr.edu>, acooper@mac.cc.macalstr.edu writes: |> in article <1pint5$1l4@fido.asd.sgi.com>, livesey@solntze.wpd.sgi.com (jon livesey) writes > > well, germany was hardly the only country to discriminate against the > jews, although it has the worst reputation because it did the best job > of expressing a general european dislike of them. this should not turn > into a debate on antisemitism, but you should also point out that luther's > antisemitism was based on religious grounds, while hitler's was on racial > grounds, and wagnmer's on aesthetic grounds. just blanketing the whole > group is poor analysis, even if they all are bigots.

i find these to be intriguing remarks. could you give us a bit more explanation here? for example, which religion is anti-semitic, and which aesthetic?

How to trick the algorithm

Even tough the explanations by LIME may look nice you should never trust an algorithm blindly! The algorithm cannot provide a good explanation for a black-box classifier which works on character level or uses features that are not directly related to tokens, depending on the interpretable representation chosen. So Black-box classifiers which use features like “text length” (not directly related to tokens) can be also hard to approximate using the default bag-of-words/ngrams model.

def predict_proba_len(docs):

proba = [

[0, 1.0, 0.0, 0] if len(doc) % 2 else [1.0, 0, 0, 0]

for doc in docs

]

return np.array(proba)

len(sample)

850

te2 = TextExplainer().fit(sample, predict_proba_len)

te2.show_prediction(target_names=twenty_train.target_names)

y=alt.atheism (probability 0.561, score -0.246) top features

| Contribution? | Feature |

|---|---|

| +0.157 | Highlighted in text (sum) |

| +0.089 | <BIAS> |

in article <1993apr3.153552.4334@mac.cc.macalstr.edu>, acooper@mac.cc.macalstr.edu writes: |> in article <1pint5$1l4@fido.asd.sgi.com>, livesey@solntze.wpd.sgi.com (jon livesey) writes > > well, germany was hardly the only country to discriminate against the > jews, although it has the worst reputation because it did the best job > of expressing a general european dislike of them. this should not turn > into a debate on antisemitism, but you should also point out that luther's > antisemitism was based on religious grounds, while hitler's was on racial > grounds, and wagnmer's on aesthetic grounds. just blanketing the whole > group is poor analysis, even if they all are bigots.

i find these to be intriguing remarks. could you give us a bit more explanation here? for example, which religion is anti-semitic, and which aesthetic?

We can detect this failure by looking at metrics:

te2.metrics_

{'mean_KL_divergence': 0.72275857543317168, 'score': 0.52407445323048907}

Luckily it’s possible to fix this.

If we suspect that the fact document length is even or odd is important, it is possible to customize TextExplainer to check this hypothesis.

from sklearn.pipeline import make_union

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.base import TransformerMixin

class DocLength(TransformerMixin):

def fit(self, X, y=None):

return self

def transform(self, X):

return [[len(doc) % 2, not len(doc) % 2] for doc in X]

def get_feature_names(self):

return ['is_odd', 'is_even']

vec = make_union(DocLength(), CountVectorizer(ngram_range=(1,2)))

te3 = TextExplainer(vec=vec).fit(sample, predict_proba_len)

print(te3.metrics_)

te3.explain_prediction(target_names=twenty_train.target_names)

{'mean_KL_divergence': 0.01184538208340986, 'score': 1.0}

y=alt.atheism (probability 0.988, score -4.426) top features

| Contribution? | Feature |

|---|---|

| +4.419 | doclength__is_even |

| +0.022 | countvectorizer: Highlighted in text (sum) |

| -0.016 | <BIAS> |

countvectorizer: in article <1993apr3.153552.4334@mac.cc.macalstr.edu>, acooper@mac.cc.macalstr.edu writes: |> in article <1pint5$1l4@fido.asd.sgi.com>, livesey@solntze.wpd.sgi.com (jon livesey) writes > > well, germany was hardly the only country to discriminate against the > jews, although it has the worst reputation because it did the best job > of expressing a general european dislike of them. this should not turn > into a debate on antisemitism, but you should also point out that luther's > antisemitism was based on religious grounds, while hitler's was on racial > grounds, and wagnmer's on aesthetic grounds. just blanketing the whole > group is poor analysis, even if they all are bigots. i find these to be intriguing remarks. could you give us a bit more explanation here? for example, which religion is anti-semitic, and which aesthetic?

What’s bad about this kind of failure (wrong assumption about the black-box pipeline) is that it could be impossible to detect the failure by looking at the scores. Scores could be high because generated dataset is not diverse enough, not because our approximation is good. The takeaway is that it is important to understand the “lenses” you’re looking through when using LIME to explain a prediction.

That’s it for now, I hope you learned something. Next time I show you, how you can use the TextExplainer of eli5 with neural networks from keras or you can have look at shaply values, a different method to explain machine learning models. Stay curious!